Chapter 13. AI Image Generation and Graphic Design

- Zack Edwards

- 2 days ago

Foundations of AI Image Generation

When I teach students about AI image generation, I like to begin not with mathematics, but with imagination. Picture yourself standing at the entrance of a vast workshop filled with glowing screens, swirling patterns, and canvases that paint themselves. It looks magical, but everything inside works according to rules—rules that allow a computer to turn simple words into detailed pictures. Today, I’m going to walk you through this workshop and show you how these systems truly think, learn, and create.

From Words to Ideas: How Prompts Become Possibilities

The first step in creating an AI image is giving the machine a prompt—your words, delivered like instructions to a very patient and very literal artist. When you write something like “a fox wearing a backpack in autumn woods,” the AI doesn’t understand the sentence like a human does. Instead, it translates the words into the mathematical meaning behind them. Every word carries patterns learned from millions of images. The AI breaks your sentence into pieces of meaning—embeddings—that act like puzzle tiles. These tiles are then rearranged and combined inside the model until a picture begins to form in its mind.

Embeddings: The Language of Meaning

Embeddings might sound complicated, but they’re simply numbers that represent concepts. Think of them as coordinates on a map inside the AI’s imagination. One coordinate might describe “fox,” while another describes “backpack,” “forest,” or “autumn.” The closer two coordinates are on this map, the more similar the concepts are. This is how the AI knows that a wolf is closer to a fox than to a toaster. When you give the machine a prompt, it searches its internal map for the best combination of coordinates that match what you described.
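
To make the map idea concrete, here is a minimal sketch in Python. The three-number vectors are invented for illustration (real models learn vectors with hundreds or thousands of dimensions), and cosine similarity is only one common way to measure how close two embeddings are.

```python
import math

# Hypothetical 3-number embeddings, invented for illustration only.
# Real models learn far longer vectors from their training data.
EMBEDDINGS = {
    "fox":     [0.9, 0.8, 0.1],
    "wolf":    [0.8, 0.9, 0.2],
    "toaster": [0.1, 0.0, 0.9],
}

def cosine_similarity(a, b):
    """Return the cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

fox_wolf = cosine_similarity(EMBEDDINGS["fox"], EMBEDDINGS["wolf"])
fox_toaster = cosine_similarity(EMBEDDINGS["fox"], EMBEDDINGS["toaster"])

print(f"fox vs wolf:    {fox_wolf:.2f}")
print(f"fox vs toaster: {fox_toaster:.2f}")
```

With these made-up numbers, the fox and the wolf score much higher than the fox and the toaster, which is the same "closer on the map" relationship described above.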

Diffusion: Painting Backward from Noise

Now imagine the AI starting with a blank canvas—but instead of white paper, it begins with pure static, like an old television with no signal. This is where diffusion comes in. Diffusion models take random noise and slowly remove the chaos, one step at a time, shaping it toward the picture described by your prompt. It’s like carving a statue backward: instead of starting with a block of marble, you start with a cloud of dust and guide it into shape. Each step removes a tiny bit of randomness, using what the model has learned from training images. Slowly, the noise becomes patterns, the patterns become shapes, and the shapes become the picture you asked for.
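
The step-by-step idea can be sketched with a toy loop in Python. This is an analogy under a big assumption, not a real diffusion model: the loop below is handed the finished "picture" and simply walks toward it, whereas a real model uses a trained neural network to estimate what noise to remove. The function name and the five-value picture are hypothetical.

```python
import random

def toy_denoise(target, steps=50, seed=0):
    """Start from random static and remove part of the remaining error each step.

    Analogy only: a real diffusion model predicts the noise with a trained
    network; it is never given the finished picture as this toy is.
    """
    rng = random.Random(seed)
    canvas = [rng.gauss(0.0, 1.0) for _ in target]  # pure noise to begin with
    history = []
    for step in range(steps):
        remaining = steps - step
        # Move each value a fraction of the way toward the target.
        canvas = [c + (t - c) / remaining for c, t in zip(canvas, target)]
        # Record the average distance still left between canvas and target.
        error = sum(abs(t - c) for c, t in zip(canvas, target)) / len(target)
        history.append(error)
    return canvas, history

target = [0.0, 0.5, 1.0, 0.5, 0.0]  # hypothetical 5-pixel "picture"
final, errors = toy_denoise(target)

print(f"error after step 1:  {errors[0]:.3f}")
print(f"error after step {len(errors)}: {errors[-1]:.3f}")
```

The error shrinks a little at every step, which mirrors how the static gradually gives way to patterns, then shapes, then a picture.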

Style Conditioning: Teaching the AI to Change Its Brushes

But what if you want your fox to look like a watercolor painting instead of a photograph? Or a line drawing? Or a 3D animation frame? That’s where style conditioning comes in. Style conditioning tells the AI which artistic “brush” to use. It nudges the model toward certain visual patterns—soft colors, sharp shadows, bold outlines, or dramatic lighting. The style becomes another coordinate in the AI’s map, blending with the meanings from your prompt. This is how a single description can generate wildly different images, depending on the style you choose.

Prompt-to-Pixel Logic: The Guided Journey from Thought to Image

Once embeddings, style instructions, and diffusion steps are combined, the AI walks through a guided process that leads from your words to the final picture. At every step, the model compares the emerging image against the meaning of your prompt, as if asking: Does this group of pixels match the idea of “fox”? Does this texture look like “fur”? Does this color fit with “autumn”? It adjusts, reassesses, and iterates through dozens or even hundreds of steps. The result is a picture that feels intentional, even though the AI is not a conscious artist. It is a system following learned patterns with astonishing precision.

Why Understanding the Process Matters

When students understand how AI image generation works, they see that it isn’t magic at all. It’s a set of tools built from logic and creativity, and those tools become much more powerful when you know how to use them. The words you choose, the details you provide, the style you request—all become part of a conversation between you and the machine. And the better you understand its language, the more beautifully and accurately it can bring your ideas to life. AI is not here to replace imagination—it’s here to amplify it.

My Name is Russell A. Kirsch: Pioneer of the First Digital Image

I was born on June 20, 1929, in New York City, the son of immigrants who believed deeply in the power of education and the promise of new ideas. From my earliest days, I was filled with an almost restless curiosity. I wanted to know how things worked, why they worked, and how they could be made better. Radios, simple circuits, early calculators—anything that processed information fascinated me. As I grew older, I began to understand that information itself could be shaped, captured, and transformed. The seeds of digital imaging were planted long before I realized what they would become.

Joining the National Bureau of Standards

After my studies in engineering and mathematics, I joined the National Bureau of Standards—what is now known as the National Institute of Standards and Technology. It was a place where brilliant minds came together with one shared purpose: to push the boundaries of what machines could do. I became part of a small team exploring how computers might not only calculate, but also interpret and represent information from the real world. At the time, computers were still unwieldy giants, hulking machines that filled entire rooms. Yet we imagined something far greater for them—machines that could “see.”

The Idea That Changed Everything

One question began to shape my days: could a computer capture an image? To us, this sounded like science fiction, but we knew that computers already processed numbers. If an image could be broken into numbers—patterns of light and dark—then, in theory, a machine could store and recreate it. This was not simply a technical challenge but a philosophical one. What is an image, if not a collection of details our eyes interpret? Could a machine learn to do the same? These thoughts drove our work forward.

Creating the First Digital Image (1957)

In 1957, everything came together. Using a rotating drum scanner that we had designed, we attempted something that had never been done before: converting a photograph into a grid of digital values. For this experiment, I chose a simple picture—my infant son, Walden. His soft, curious face seemed a fitting subject for a technological first step into a new world. As the scanner transformed the photograph into a 176-by-176 grid of numbers, something extraordinary happened. We loaded those numbers into the computer and asked it to reconstruct the image. When the rough, pixelated face of my son appeared on the machine, we all fell silent. It was not perfect, but it was unmistakably him. The first digital image in human history had come into being. I remember thinking, “We have changed the way the world will see.”

Understanding Pixels and the Digital World

Looking at the image, I realized something fundamental: pictures could be made out of tiny squares—pixels. Each pixel represented a value, a piece of the larger whole. That simple insight would eventually reshape medicine, astronomy, photography, filmmaking, and, decades later, the way billions create and share images every day. I often told people that our early work was just a question asked at the right time. Could we teach computers to see? The answer, pixel by pixel, became yes.

Reflections on a Lifetime of Innovation

As the years went on, digital imaging grew far beyond anything I could have imagined. Satellites used pixel arrays to map the Earth. Doctors used digital scans to detect illnesses earlier than ever before. Artists used computers to create things no human hand could draw alone. And today, artificial intelligence uses images to learn, to interpret, and even to imagine. I watched with quiet pride as the world embraced the idea that was once only a fragile experiment in a small laboratory.

Legacy and the Future I Envisioned

I never considered myself the inventor of a revolution; I was simply a person following a question with persistence. Yet the digital image became one of the fundamental pillars of the modern world. Whenever I saw a child using a camera phone, or a scientist studying stars through digital sensors, I felt connected to that moment in 1957 when my son’s face appeared in rough pixels. It reminded me that even the most groundbreaking innovations begin as small experiments filled with curiosity. My life’s work was not about technology alone—it was about teaching machines to help us see more fully, more clearly, and more creatively than ever before.

Understanding Prompts for Visual Outputs – Told by Russell A. Kirsch

When we created the first digital image, we discovered that pictures could be made of numbers. Today, AI image systems go a step further: they create pictures from words. A prompt is more than a simple description—it is the bridge between your imagination and the machine’s ability to generate a meaningful visual output. To understand prompts, you must think the way a computer thinks, not in emotions or intuition, but in patterns, structure, and clarity. Let me guide you through how your words shape the digital images AI creates.

The Structure of a Strong Prompt

A good prompt is like a well-written recipe. You need ingredients, instructions, and clear expectations. When you tell an AI “make a tree,” it does not know whether you want a pine tree in the mountains, an oak in a meadow, or a cherry blossom in spring. The machine searches its learned patterns and chooses a generic version. But if you say, “a tall cherry blossom tree in full bloom, standing beside a calm pond at sunrise,” the system now has a map. It knows the style of tree, the setting, the mood, and even the lighting. Prompts with clear structure allow the machine to narrow its focus and produce images that match your vision more closely.

Adding Descriptors that Guide the Machine’s Thinking

Descriptors add richness to your prompt. Words describing color, texture, light, emotion, perspective, and artistic style directly influence the machine’s choices. Phrases like “soft golden light,” “detailed fur texture,” “dramatic shadows,” or “drawn in an ancient Japanese ink style” help the AI match your mental picture. The more intentional the descriptors, the fewer assumptions the computer must make. This reduces randomness and increases accuracy. You must remember that an AI does not guess as a human does. It matches your words against patterns it learned from millions of training images.

Examples: Weak vs. Strong Prompts

Let me show you the difference. A weak prompt might say, “a dog playing outside.” From a machine’s perspective, this could mean anything. The dog could be any breed, in any location, with any lighting or style. A stronger prompt would say, “a small brown beagle running joyfully across a grassy park during sunset, captured in a realistic photographic style.” The first prompt gives the AI nearly infinite choices. The second gives it a direction, a tone, and a style. Strong prompts create strong images because they remove ambiguity.
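
One way to keep that structure in view is to assemble a prompt from named parts. The sketch below is only an illustration in Python: the function and its field names (subject, action, setting, lighting, style) are hypothetical and do not belong to any particular image tool.

```python
def build_prompt(subject, action="", setting="", lighting="", style=""):
    """Join the non-empty parts of a prompt into one comma-separated string."""
    parts = [f"{subject} {action}".strip(), setting, lighting, style]
    return ", ".join(p for p in parts if p)

# The weak prompt fills in only the subject and a vague action.
weak = build_prompt("a dog", action="playing outside")

# The strong prompt fills in every field, leaving fewer choices to chance.
strong = build_prompt(
    "a small brown beagle",
    action="running joyfully",
    setting="across a grassy park",
    lighting="during sunset",
    style="realistic photographic style",
)

print(weak)
print(strong)
```

Empty fields in the weak prompt are exactly the places where the machine must fall back on a generic choice.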

Using Reference Images to Shape the Result

In the early days of digital imaging, we relied on reference pictures to calibrate our technology. AI still benefits from them today. When you upload or link a reference image, you provide a visual anchor. This teaches the AI what you mean by “this character’s face,” “this style of building,” or “this type of texture.” A reference image serves as a visual guide, and your written prompt becomes the instructions for modifying or expanding it. The AI blends the two forms of input—words and example images—to produce results that are more consistent and reliable.

Balancing Detail Without Overloading the System

While detail is helpful, too much detail can confuse the AI. If you give twenty unrelated descriptors, the machine may struggle to decide which elements matter most. The key is balance: provide enough clarity to define your scene, but not so much that the AI becomes overwhelmed by conflicting signals. Think of your prompt like composing a photograph—you choose what belongs in the frame and what does not. Clarity always wins over complexity.

The Art and Logic of Prompt Crafting

Crafting a good prompt is both a creative act and a logical one. You are teaching the system how to think visually, step by step, through your words. When you understand how structure, descriptors, and reference images all shape the outcome, you take full command of the process. Remember, the machine does not create from emotion but from pattern. Your task is to guide those patterns with precision and purpose. When you do, the results are not just images—they are extensions of your imagination translated into digital form.

My Name is Willard S. Boyle: Co-Inventor of the CCD Sensor

I was born on August 19, 1924, in Amherst, Nova Scotia, though much of my childhood was spent far from there. My father was a doctor, and his work took us deep into the forests of northern Quebec, where my earliest memories involve snow-covered landscapes, lantern-lit evenings, and a sense of wonder at the world around me. Even in those remote places, I found myself drawn toward understanding how things worked. I built small devices, tinkered with tools, and developed a fascination for solving problems, even when the path forward wasn’t obvious. Education became my doorway to a wider world, and I pursued physics with a passion that only grew stronger as I aged.

Forged by War and Education

My early adulthood coincided with World War II, and like many of my generation, the conflict shaped my life. I joined the Royal Canadian Navy, where my skills in mathematics and physics found immediate use. After the war, I returned to academic pursuits, earning a doctorate in physics and quickly immersing myself in research that bridged the boundaries of theory and application. I wanted not only to understand the universe, but also to build tools that allowed others to understand it more clearly. That desire eventually led me to Bell Laboratories, one of the most intellectually vibrant places on Earth.

A New World of Possibilities at Bell Labs

At Bell Labs, I found myself surrounded by extraordinary minds—people who believed that the future could be invented whenever someone dared to ask the right question. My work ranged from lasers to optical communication, and every day felt like a doorway into uncharted territory. The spirit of exploration was contagious. We worked in small teams, often sketching ideas on chalkboards moments after they came to us. It was during one of these energetic and imaginative sessions in 1969 that my life—and the lives of millions—changed forever.

The Moment the CCD Was Born

One morning, my colleague George E. Smith and I were discussing a challenge. We needed a new kind of memory device—one that could move and store electrical charges efficiently. The idea came quickly, as if it were waiting for us. What if we could create a series of tiny capacitors that could pass charges from one to the next, like a bucket brigade? Within an hour, the concept of the charge-coupled device—the CCD—was sketched on a blackboard. At first, we imagined it as a new type of electronic memory. But soon another realization emerged: this device could capture light. Each tiny capacitor could act as a pixel, gathering photons and converting them into electrical signals. Suddenly, we had a tool that could transform light itself into digital information.

A Revolution in Imaging

When we built the first CCD and watched it convert light into data, we understood its potential, but the world had not yet caught up. Slowly, scientists and engineers recognized what it meant: cameras no longer needed film. Light could be captured electronically. Images could be stored, transmitted, analyzed, and reproduced without ever touching a piece of photographic paper. From astronomy to medicine, from home cameras to satellites, the CCD transformed how humanity sees the universe. I often said that our invention opened windows—literal and metaphorical—that had previously been sealed shut.

Recognition and Reflection

In 2009, many decades after the invention, George Smith and I received the Nobel Prize in Physics. Standing on that stage, I felt pride, but also humility. The CCD was the result of curiosity, teamwork, and the spirit of innovation that defined Bell Labs. I thought back to my early years in the frozen forests, to the boy who stared at lantern light and wondered how brightness and shadow could be measured. It seemed fitting that my life had been dedicated to turning light into understanding.

Legacy of a Life in Light

My work on the CCD was just one part of a long scientific journey, but it became the cornerstone of modern digital imaging. Every digital photograph, every telescope capturing galaxies, every medical scan that reveals hidden truths—all trace their lineage to that simple sketch on a Bell Labs chalkboard. I never set out to change the world. I set out to explore it. And in doing so, I helped create a way for humanity to see more clearly, more vividly, and more truthfully than ever before. My story is one of curiosity, invention, and the quiet power of light transformed into knowledge.

Styles, Aesthetics, and Visual Language – Told by Willard S. Boyle

When my colleagues and I first explored how light could be converted into digital information, we weren’t thinking about artistic style. We were thinking about truth—how to capture the world as accurately as possible. Yet today, as AI turns text into images, I find myself fascinated by how these systems can imitate not just the physical world, but the countless artistic styles humans have created. Every style is its own language, shaped by history, culture, and emotion. Teaching an AI to speak that language begins with understanding what makes each style unique.

Realism and the Precision of Detail

Realism is closest to the world my inventions were designed to capture. When you ask an AI for realism, you are requesting accuracy: clear lighting, sharp textures, correct proportions, and colors that reflect natural physics. To guide the machine toward realism, you must use descriptors like “photorealistic,” “natural lighting,” or “high detail.” The AI responds by pulling from patterns that resemble what a camera would see. In a sense, realism is the most literal visual language, a direct translation of the world into pixels.

Anime and the Art of Simplified Expression

Anime communicates emotion through simplicity and exaggeration. Large eyes, expressive poses, and bold outlines give the style its charm. When instructing an AI to mimic anime, you must emphasize the shorthand the style relies on: “smooth shading,” “stylized proportions,” “large reflective eyes,” or “thin ink outlines.” These cues help the AI shift from the physics-driven accuracy of realism to the symbolic storytelling of Japanese animation. It becomes less about capturing the world as it is and more about capturing the feeling behind it.

Sketch and Line Art: The Beauty of Suggestion

A sketch speaks in hints rather than statements. A few lines, a shadow here, a gesture there—the viewer fills in the rest. To coax this language from an AI, you must guide it with descriptors like “rough pencil lines,” “unfinished texture,” “monochrome,” or “hand-drawn style.” Line art is even more minimal, relying on clean contours and negative space. These styles remind us that images do not need to be full to be complete; sometimes suggestion is the most powerful form of communication.

Watercolor and the Softness of Fluid Color

Watercolor is a dance between pigment and water, something technology never captured easily in my time. When requesting watercolor from an AI, you must emphasize softness and flow: “bleeding edges,” “translucent washes,” and “soft gradients.” The system will then shift from sharp definition to gentle diffusion, blending colors into one another as if they were brushed across textured paper. This is one of the rare styles where imperfection is the goal—where the charm lies in unpredictability.

Surrealism and the Logic of Dreams

Surrealism speaks in contradictions, bending reality into impossible forms. To generate a surreal image, the AI needs prompts that combine ordinary elements in strange or symbolic ways: “floating objects,” “distorted landscapes,” or “impossible architecture.” Surrealism is an invitation for the machine to step beyond the boundaries of realism, guided not by accuracy but by imagination. It teaches the AI to break rules intentionally, something machines rarely do without being told.

Historical Illustration and the Echoes of the Past

Historical illustrations have distinct visual fingerprints, shaped by the tools and limitations of their time. Woodcuts, medieval manuscripts, Renaissance portraits—each carries texture, line quality, and color palettes specific to their era. When guiding the AI, descriptors like “engraving-style lines,” “aged parchment tones,” or “Renaissance oil painting texture” help it replicate the constraints of old techniques. In doing so, the machine recreates the look and feel of images crafted long before digital sensors existed.

Blueprints and Scientific Diagrams: Precision Over Flourish

Blueprints and diagrams speak the language of clarity and measurement. They prioritize structure, hierarchy, and information. To create them, you must ask the AI for “technical line work,” “schematic-style labels,” or “isometric structure.” The machine then shifts into a mode where accuracy is more important than artistry. These styles reveal that images are not always created to be admired—sometimes they are created to instruct.

Teaching the AI to Change Its Visual Voice

The power of AI image generation lies in how easily it can change its “voice.” With a single prompt, it can move from stiff scientific diagrams to vivid surrealism. But this shift only happens when you understand the visual language you’re asking it to speak. Each style is a set of rules—some strict, some loose—that the AI can follow if you provide the right guidance. As with any language, clarity leads to mastery. The better you define the style you want, the more fluently the machine creates in that artistic dialect.
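
As a small illustration of switching voices, the Python sketch below keeps one description fixed and swaps only the style descriptors. The phrases come from the style sections above; the dictionary layout and the function name are assumptions made for this example.

```python
# Descriptor phrases taken from the style sections above; the dictionary
# layout and function name are illustrative assumptions.
STYLE_DESCRIPTORS = {
    "realism": ["photorealistic", "natural lighting", "high detail"],
    "anime": ["smooth shading", "stylized proportions", "thin ink outlines"],
    "sketch": ["rough pencil lines", "monochrome", "hand-drawn style"],
    "watercolor": ["bleeding edges", "translucent washes", "soft gradients"],
    "blueprint": ["technical line work", "schematic-style labels"],
}

def apply_style(description, style):
    """Append the descriptors for one named style to a scene description."""
    if style not in STYLE_DESCRIPTORS:
        raise ValueError(f"unknown style: {style}")
    return ", ".join([description] + STYLE_DESCRIPTORS[style])

scene = "a fox wearing a backpack in autumn woods"
for style in ("realism", "watercolor", "blueprint"):
    print(apply_style(scene, style))
```

The scene never changes; only the dialect does, which makes it easy to compare how each style reshapes the same idea.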

Why Style Choice Shapes the Entire Image

Choosing a style is more than choosing a look. It determines the emotional tone, the storytelling approach, and even the level of detail the AI uses. Style is the lens through which your idea becomes visible. Understanding this gives you control, not just over what the AI creates, but how it communicates. And in a world where images can now be generated in seconds, that control is more important than ever.

Designing for Purpose: Posters, Logos, Icons, and Infographics – Told by Russell A. Kirsch

When I created the first digital image, I wasn’t thinking about posters or logos or educational graphics. But I did understand one thing clearly: every image exists for a purpose. Today, purpose matters more than ever, because AI image systems can create thousands of visuals in seconds. The challenge is not producing the image—it is guiding the machine to produce the right image. That guidance begins with writing prompts tailored to the specific deliverable you need, whether it’s a striking poster, a clean icon, or a detailed character portrait. Without knowing the purpose, the AI cannot know the design.

Writing Prompts for Posters and Social Graphics

Posters must communicate bold, clear ideas. When generating posters with AI, your prompt should focus on layout, hierarchy, and emotion. Instead of simply describing the subject, guide the AI with instructions such as “strong centered composition,” “high-contrast colors,” or “large bold title text space at the top.” Social media graphics need similar clarity but often in square or vertical formats. The more specific you are about orientation, mood, and focal point, the stronger the final image becomes. Remember, posters and social graphics are meant to grab attention—your prompt should reflect that intention.

Creating Logos with Simple, Focused Language

Logos are the opposite of posters—they rely on simplicity rather than complexity. When asking AI to design a logo, your prompt should emphasize minimalism, clean lines, and strong shapes. Phrases such as “simple geometric symbol,” “flat vector style,” or “one or two colors only” help the machine avoid clutter. You must also describe the personality of the brand: playful, serious, elegant, futuristic. Without emotional direction, the AI may fill the logo with unnecessary decoration. Logos succeed by being memorable, and that begins with a prompt that eliminates excess.

Designing Icons for Clarity and Consistency

Icons must communicate instantly. They work in tiny spaces, and the smallest detail can make them unreadable. Your prompt should specify “minimal detail,” “high contrast,” and “consistent style across multiple icons.” If you need a full icon set, explain that they must match: “set of eight icons in the same line-art style.” The AI responds best when you give constraints, because icons are not imaginative illustrations—they are symbolic tools meant to guide the user’s eye in predictable ways.

Building Infographics and Educational Graphics

Infographics rely on information hierarchy, not artistic flair. Your prompt should guide the AI to include labeled sections, clear shapes, and structured layouts. Words like “organized,” “diagram-style,” “labeled components,” or “clean infographic layout with sections for text” tell the machine exactly how to structure the content. Educational graphics require similar precision. For worksheets or diagrams, prompts should specify “space for student writing,” “simplified visuals,” or “clear labels without clutter.” When the deliverable is meant for learning, clarity becomes the primary goal.

Creating Box Art, Game Assets, and Character Portraits

Box art needs drama, energy, and a strong central focus. Prompts should include stylistic direction such as “dynamic action scene,” “bold lighting,” or “highly detailed fantasy illustration for game box.” Game assets require an entirely different approach—clean edges, consistent colors, and a specific angle or perspective. Phrases like “isometric game asset,” “transparent background,” or “uniform style for multiple items” help the AI maintain visual cohesion. Character portraits depend on personality and detail; prompts should describe emotion, pose, clothing, and style with precision. Each of these deliverables speaks its own visual language, and your prompts must match it.

Choosing Words That Match the Intended Use

The biggest mistake people make when writing prompts is treating all images the same. A prompt for an infographic cannot be written like a prompt for a fantasy painting. A prompt for a logo cannot be written like a prompt for a character portrait. The design’s purpose determines the structure of the prompt. When you understand the purpose, you understand what details matter, what to emphasize, and what to exclude. Purpose creates direction, and direction is what allows the AI to produce meaningful work.

Turning Your Vision into a Precise Instruction

Good prompts are not long—they are intentional. They describe not only what should appear in an image, but how the image should function. This approach transforms your words from simple descriptions into design instructions. When you guide the AI with clarity, the result is not just an image—it is a deliverable that fits the need it was created for. And that is the heart of design: not decoration, but communication.

Character & Consistency Management

When I work with storybooks, games, or curriculum guides, I quickly learn that a character is more than a collection of shapes and colors. A character becomes a companion to the reader, a guide through the material, or even the face of an entire project. But for that relationship to form, the character must remain recognizable. If their hair changes shape between pages, their clothes shift color from chapter to chapter, or their personality feels different from one illustration to the next, the spell breaks. Consistency is what makes a character feel real. And in the world of AI image generation, consistency becomes a skill you must intentionally design into your prompts.

Defining the Core of the Character

Before I generate a single image, I start with what I call the character core. This is the set of traits that will never change: hairstyle, eye shape, height, clothing style, signature colors, and even personality cues, expressed not in text but in visual form. These core details become the foundation of every prompt I write afterward. When I describe a character, I always use the same descriptors in the same order. This repetition gives the AI the same signal every time, so the traits stay together. Consistency begins with discipline, and the words we choose become the anchor that holds the character in place.

Using a Base Reference Image

A single strong reference image can do more for consistency than a hundred lines of text. When I have the exact look I want (a face, a pose, a uniform), I use that image as a visual anchor. AI models respond powerfully to references, because images reduce ambiguity. Most image generators do not remember earlier requests, so I include the reference with every prompt, and each time it tells the AI what “this character” means. Even if I request them in different outfits, lighting conditions, or environments, the underlying facial structure and proportions remain stable. I think of it as the master sketch that every other illustration must echo.

Building a Prompt Pattern for Different Scenes

A character rarely stays in the same place or angle, so I design prompt templates for different situations: standing poses, emotional expressions, action scenes, teaching scenes, or portrait-style images. Each version contains the same character core, followed by modifiers for the specific scenario. This creates a predictable pattern that helps the AI understand when to hold details constant and when to change them. Over time, these templates become a library I can reuse for any project, ensuring my character feels like themselves no matter what world they’re standing in.
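
Here is one way such a template library might look, sketched in Python. The character, the scene wording, and the style line are all hypothetical; the point is only that the core text is written once and reused unchanged in every prompt.

```python
# Hypothetical character core: the traits that never change, always in the same order.
CHARACTER_CORE = (
    "a young explorer with short curly red hair, round green eyes, "
    "a yellow raincoat, and a brown satchel"
)

# One fixed style line, repeated in every prompt for visual unity.
STYLE = "flat vector illustration"

# Scene modifiers: the only part that varies between prompts.
SCENE_TEMPLATES = {
    "standing": "standing pose, facing the viewer, plain background",
    "action": "running through a forest, dynamic angle",
    "teaching": "pointing at a chalkboard in a classroom",
    "portrait": "head-and-shoulders portrait, gentle smile",
}

def scene_prompt(scene):
    """Build a prompt as: fixed character core, then scene modifier, then fixed style."""
    return f"{CHARACTER_CORE}, {SCENE_TEMPLATES[scene]}, {STYLE}"

for name in SCENE_TEMPLATES:
    print(f"[{name}] {scene_prompt(name)}")
```

Because every prompt begins with the same core and ends with the same style, any difference between two prompts is a difference I chose on purpose.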

Controlling Style for Visual Unity

A consistent character means nothing if the style shifts from image to image. I make sure every prompt includes a style declaration, whether that’s line art, watercolor, digital painting, flat vector, or realistic shading. Style determines the visual language, and even a perfectly repeated character can feel inconsistent if they are rendered differently from one image to the next. By keeping the style command stable, the AI maintains not only the character’s identity, but the entire project’s tone.

Avoiding Overloading the Prompt

One common mistake in character design prompts is overspecifying. Too many details can accidentally pull the AI away from the core concept. I’ve learned to focus on the essentials: a few fixed features, a defined style, and a clear scene. The rest unfolds naturally when the AI has a solid foundation to work from. Prompts meant for consistency should be clear, simple, and focused—not a paragraph of competing ideas.

Iterating and Refining Through Comparison

After generating several images, I line them up and compare them side by side. Small changes—a nose that’s too long, a shift in hair texture, a slight difference in jawline—can easily snowball into major inconsistencies. When I spot these shifts, I refine the prompt or reinforce the reference image again. Consistency through AI is not a one-step process; it’s a loop of creation, comparison, and correction. This is how long-term characters become reliable, expressive, and familiar.

Building a Character That Lasts

When a character is consistent, they become more than an illustration—they become a companion the audience trusts. Whether they appear in a game, a comic, a curriculum guide, or a monthly adventure box, their familiar face ties the entire project together. AI can help create them faster than ever before, but it’s our responsibility to guide the machine with clarity and purpose. Consistency is not accidental. It’s crafted. And when done well, it allows your stories and lessons to come alive through a character who feels like they’ve been with your readers from the very beginning.

Ethical Image Creation

When I work with AI image generation, I often remind students that every tool we use reflects our character. A hammer can build a home or break a window. A camera can document truth or twist it. And AI, with its ability to create powerful and convincing images, tests our intentions more clearly than almost anything else. The images we choose to create reveal who we are. If we use AI to build beauty, it shows our love for creativity. If we use it to help others, it shows generosity. But if we use it to deceive or harm, it shows a side of ourselves we must not ignore. AI is a mirror, and ethical image creation begins with understanding what it reflects.

Accuracy and the Truth Behind the Image

Accuracy is one of the first ethical pillars in visual creation. When I generate an image meant to represent a historical figure, scientific concept, or cultural tradition, I owe it to my audience to depict it truthfully. AI can easily invent details that never existed—a Roman soldier with armor from the wrong century, an Indigenous leader wearing symbols from the wrong tribe, or a scientific diagram that misrepresents how something functions. When we create images for learning, we must take the extra step to verify accuracy. The goal is not just to create something attractive, but something trustworthy. Any tool that shapes how people see the world must be used with respect for the truth.

Avoiding Harmful Stereotypes

AI models learn from millions of images, but those images often carry bias. If we are not careful, our prompts can unintentionally recreate harmful stereotypes about race, culture, gender, religion, or disability. A prompt like “a professional person” may produce a certain type of face repeatedly. A prompt like “a criminal” may show biased patterns in appearance. The responsibility is on us to notice these patterns and break them. By choosing our words intentionally and reviewing output critically, we guide AI away from hurtful portrayals. Ethical image creation means recognizing the power images hold and refusing to repeat the mistakes of the past.

Respecting Likeness Rights

Just because AI can imitate a person’s face doesn’t mean it should. Whether it’s a celebrity, a family member, a historical figure, or someone from a news story, likeness carries dignity. People deserve to control how they are portrayed. When I generate images, I ask myself: Does this person approve? Is this respectful? Am I using their identity for education, imagination, or exploitation? Even with historical figures, I aim to portray them fairly, not turning them into caricatures or using their image in ways that distort their legacy. Respecting likeness is respecting humanity.

Avoiding Deepfake Misuse

One of the greatest ethical dangers in image generation is deepfake misuse—the creation of images meant to deceive or manipulate. A single artificial picture can damage reputations, mislead the public, or spread fear. This is where character truly reveals itself. The question is simple: Am I using AI to tell the truth or to distort it? For students, educators, and creators, the answer must always lean toward honesty. Ethical image creation requires never using AI to pretend something happened when it did not, or to create false evidence that harms real people.

Authenticity in Historical Recreations

When we bring the past to life through AI-generated images, we walk a delicate line. We want to help students visualize ancient worlds, but we must not rewrite history through inaccuracy or romanticization. Authenticity means choosing clothing appropriate to the era, portraying people with dignity, and avoiding visuals that oversimplify complex cultures. I remind myself that history is not a playground but a record of real lives. AI can illuminate that record, but only if we guide it with care and humility.

The heart of ethical image creation is understanding that AI does not have morality—only users do. Its images are shaped by our intentions, our biases, our curiosity, and our choices. When we sit at the keyboard to generate an image, we decide what kind of creator we want to be. Do we use AI to uplift, educate, and inspire? Or do we use it to mislead, mock, or manipulate? Every image becomes a reflection of our inner selves. In the end, AI is not here to replace human creativity—it is here to reveal it. And how we choose to use it tells the world exactly who we are.

Copyright, Licensing, and Commercial Use

When people think about AI image generation, they often picture the creativity, the speed, and the endless possibilities. But behind every image—especially those used in schools, products, or businesses—there are rules that protect creators, artists, and educators. Learning these rules isn’t about limiting creativity. It’s about respecting the work of others, ensuring that what we build is honest, and protecting ourselves from future legal problems. Copyright, licensing, and commercial use form the foundation of ethical digital creation, and understanding them is essential for every educator, student, and storyteller.

What “Commercial Safe” Really Means

Commercial safe is a term many AI companies now use to describe models or images that can legally be used in projects meant to be sold. This includes books, posters, curriculum guides, merchandise, apps, game assets, and more. When something is labeled commercial safe, it generally means the AI model was trained on images the company has legal rights to use: public domain materials, licensed images, or artwork created specifically for training. For educators, this offers peace of mind. If the tool makes that guarantee, you can use the images in your classroom materials or sell educational products with far less worry about copyright infringement, though it is still worth reading the tool’s license terms.

Understanding Rights-Protected Content

Rights-protected content refers to images, artwork, or creative works that still belong to their creators. If an AI model was trained on rights-protected material without permission, it raises serious ethical and legal concerns. Some AI tools scrape the internet for images—pulling in copyrighted artworks, photographs, brand logos, or illustrations without the artists’ consent. This has led to accusations that these tools are copying styles or reproducing recognizable characters. For educators, this means extra caution. If a model uses rights-protected training data and you create something that resembles a real artist’s work, you could unknowingly be walking into copyright trouble. The safest path is choosing models that disclose how they were trained and avoid unlicensed scraping.

What Training Transparency Means

Training transparency is the promise—or the requirement—that AI companies disclose what kinds of images and data their models learned from. Some companies openly share everything: the sources, datasets, and licensing agreements. Others refuse to reveal their training materials, claiming it is a trade secret. For educators, transparency is vital. If you know the model was trained ethically, you can feel comfortable using its outputs in your classroom or resources. But if the company avoids answering questions about its training data, you must assume there is risk. Transparency gives you trust. Without it, you rely on guesswork.

Open vs. Closed Models and Their Risks

Open models, such as open-source tools, give users control: they can be downloaded, customized, trained, or modified. But openness also means the training data may be unclear. Some open models were trained on massive public datasets scraped from the web, which likely include copyrighted or private materials. Closed models, like those from major companies, do not allow you to see the internal code, but may offer stronger commercial protections, clearer licensing agreements, or curated training data. For educators, the best choice depends on the intended use. If you’re making materials for students only, open models can be helpful. But if you create resources to sell or distribute widely, closed models that carry a commercial-safe guarantee typically offer stronger legal protection.

Court Cases Shaping the Future of AI and Copyright

Right now, the United States legal system is wrestling with major questions surrounding AI training and copyright. Artists have sued several AI companies, claiming their copyrighted artwork was used without permission to train models. Musicians and authors have made similar accusations—arguing that AI tools learned from their creative works and can now generate pieces in their style. These court cases will determine whether training on copyrighted material without consent counts as fair use or infringement. They will also decide whether AI companies must remove certain data, pay artists, or redesign their systems entirely. For educators, the outcome will shape what tools are safe to use and how much responsibility falls on the creators of AI systems.

How Educators Can Use AI Images Safely

Even with uncertainties in the legal world, educators can take simple steps to stay safe and ethical. Choose tools that offer commercial-safe labels or clear training transparency. Avoid generating images of real celebrities, copyrighted characters, or distinctive artwork styles that belong to modern artists. Keep your prompts original—focus on ideas, not replications of existing media. And always think about whether your image respects creators, students, and the truth. When you take this approach, you protect both yourself and your audience, all while modeling integrity for the students you teach.

At the heart of the matter is a simple truth: how we use our tools shows who we are. If we use AI to create beautiful, helpful images that contribute to learning, it shows our generosity and creativity. If we use it to imitate artists without credit, or to create deceptive visuals, it reveals something very different. AI is a mirror of our choices. Ethical image creation, licensing awareness, and respect for rights are not burdens—they are chances to demonstrate integrity. And when we choose the right path, we prove that our ideas and creations come not from shortcuts, but from our commitment to doing good in a digital world that needs responsible guides.

Limitations and Pitfalls of AI Art

When students first discover AI art, the excitement is undeniable. It feels like magic—type a few words, and suddenly a vivid painting or dazzling scene appears before you. But behind that magic are weaknesses that every creator must understand. AI does not think the way we do, nor does it truly see the world. It imitates patterns, guesses at connections, and sometimes invents things that never existed. If we trust AI blindly, especially in educational work, we risk passing along errors that spread confusion instead of clarity. Understanding these pitfalls is the only way to use AI responsibly and confidently.

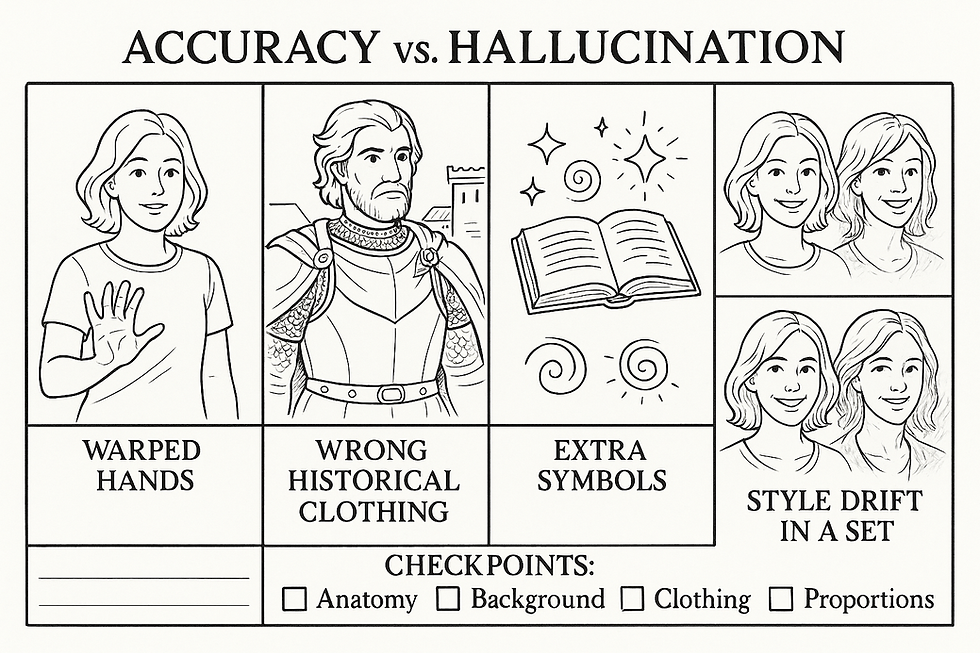

Hallucinated Details and Invented Information

AI models are extraordinary at producing convincing imagery, but sometimes too convincing. They often add details that were never asked for—extra symbols on clothing, fictional writing on signs, invented architectural features, or imaginary objects hidden in backgrounds. These hallucinated elements may look intentional, but they are simply the model filling gaps in its understanding. In historical illustrations, this can be particularly dangerous, making a scene appear authentic when it includes completely fabricated cultural elements. As creators, we must examine every image closely and remove or correct the AI’s inventions before presenting them to a student or audience.

Warped Anatomy and the Limits of Visual Logic

One of the most common issues with AI art is anatomy. Hands with too many fingers, eyes that don’t align, limbs bending the wrong direction, expressions that feel unnatural—these flaws happen because AI does not comprehend the structure of the human body. It recognizes patterns of bodies from many images, but it doesn’t understand bones, joints, or balance. When creating characters, portraits, or educational illustrations, these distortions can break immersion or confuse learners. I always recommend scanning the image as if you’re a sculptor: checking proportions, posture, and symmetry. When something feels “off,” it usually is, and editing becomes essential.

Style Drift and Unpredictable Variations

Even with a consistent prompt, AI has a habit of drifting from the intended style. One image may look clean and simple, while the next becomes overly textured or dramatic. Sometimes the AI shifts from watercolor to digital painting without instruction, or it adds shading even when you asked for flat colors. Style drift is one of AI’s biggest challenges, because it breaks visual continuity in storybooks, curriculum sets, or game assets. The only solution is consistency in your prompts, careful review, and often multiple attempts until the style stabilizes. Human oversight maintains the visual unity that AI cannot guarantee.

Incorrect Historical Costuming and Misleading Details

AI models are trained on millions of images, but not all of them are historically accurate. When you request a Roman general, a medieval queen, or an ancient Egyptian priest, the AI may mix clothing styles from different eras or invent new ones entirely. A Viking might appear with fantasy armor, or an early American settler might wear buckles that did not exist at the time. These inaccuracies may seem small, but in educational materials they misrepresent real cultures, timelines, and traditions. When creating historical illustrations, creators must verify every visible detail—clothing, tools, hairstyles, and setting—against reliable sources. AI can assist, but it should never replace research.

Why Human Revision Is the Final, Essential Step

AI can generate concepts at lightning speed, but it cannot guarantee truth, coherence, or reliability. That responsibility belongs to us. Every image must be reviewed with a human eye: checking for accuracy, correcting distortions, aligning style, and ensuring the final product reflects the real world—or the fictional one—faithfully. Human revision brings intention back into the artwork, transforming AI’s rough ideas into polished deliverables. Without revision, the mistakes slip through, and the magic becomes misinformation.

Using AI Carefully in Educational Content

In education, accuracy is sacred. A single incorrect detail may confuse a student or shape their understanding of history, science, or culture for years to come. AI art can enrich learning and bring subjects to life, but only when carefully guided by creators who know its limits. We must approach every image with curiosity, skepticism, and craftsmanship. The goal is not to avoid AI, but to use it wisely—turning a tool known for its flaws into one that helps us teach with clarity, beauty, and truth.

In the end, AI art is a powerful assistant, not an artist. It provides raw material, but we provide meaning. When we understand its pitfalls—hallucinated details, warped anatomy, drifting styles, and inaccurate history—we can anticipate its weaknesses and correct them. Human judgment transforms AI’s imperfections into opportunities for better work. And when we approach AI art with care and intention, we ensure our projects remain not only creative, but trustworthy.

Human–AI Collaboration in Visual Design – Told by Russell A. Kirsch, Willard S. Boyle, and Zack Edwards

When the three of us sit down to talk—Russell with the spark of early digital imagination, Willard with the precision of sensor-driven light, and me with the modern tools of generative AI—we do so not as three experts from different eras, but as three creators looking at the same evolving challenge. How do humans and machines work together in visual design without losing clarity, truth, or meaning? Our conversation becomes a bridge between past innovation and present opportunity. And as we begin, we quickly discover that while technology has transformed dramatically, the need for thoughtful human direction has stayed exactly the same.

Discussing What AI Does Well

Russell leans forward first, amused by how fast modern tools produce drafts. He says that what once required hours of scanning, coding, and rendering now appears almost instantly from a single prompt. “The speed is astonishing,” he admits. “AI is unmatched in generating variations—dozens of concepts, styles, or angles in moments.” Willard adds that AI shines in experimentation: “If you want to see an idea from five perspectives, the machine delivers them without complaint.” I agree, pointing out that AI excels at the first step—the messy brainstorming phase where most creators get stuck. AI fills the page with possibilities so the human doesn’t have to fear the blank canvas. Together, we conclude that AI is a powerful drafting partner precisely because it does not hesitate, doubt, or slow down.

Identifying Where AI Still Struggles

But Willard quickly points out that speed does not equal accuracy. “A camera sensor captures reality; an AI produces an interpretation of patterns,” he says. Russell nods, adding that these interpretations often warp reality without warning. Hands bend strangely, clothing belongs to the wrong century, and symbols appear that no one requested. AI may understand likeness, but it does not understand truth. I explain that this is where students and creators must be careful: AI’s confidence can be misleading. It delivers beautiful errors—believable but incorrect. Without human revision, the results may confuse, distort, or misrepresent the subject. And thus we agree: AI drafts, but humans must handle accuracy.

Refining Composition With Human Intention

Russell notes that composition is not something a machine “understands.” It recognizes statistical patterns—where objects usually appear—but it cannot determine what matters most in a design. Willard adds that composition is the language of intention, something only human minds can fully grasp. Should the viewer’s eye land on the character? The title? The symbol in the corner? AI cannot decide. It often fills the frame with unnecessary clutter or misplaces focal points. I describe how I review AI drafts like a sculptor: trimming elements, moving shapes, simplifying backgrounds, and directing the viewer’s attention where the message truly lives. Together, we agree that composition is a human responsibility, because only a human can decide what the image is trying to say.

Why Typography Requires Human Hands

Typography becomes our next topic, and this time all three of us laugh. “AI still cannot draw proper letters,” Willard grins. Russell shakes his head, amused that after decades of advancing digital imagery, the machine still fails at consistent text. I add that typography is not only about legibility—it’s about personality, harmony, and voice. Whether designing posters or educational worksheets, humans must refine the text: choosing fonts, adjusting spacing, aligning content, and creating hierarchy. AI may provide a layout suggestion, but it cannot polish letterforms with the nuance that communication demands. Typography, we agree, is where human craftsmanship is still essential.

Enhancing Accuracy Through Human Review

Willard brings back the importance of accuracy, especially for educational content. AI often blends historical eras, invents artifacts, or misinterprets cultural symbols. Russell points out that such mistakes are dangerous because they feel convincing. A student might see an AI-generated image of ancient Egypt with fantasy jewelry or modern tools—and assume it is historically correct. My role becomes clear: to teach creators how to inspect every detail, compare with references, and correct AI hallucinations. The machine drafts; the human verifies. That verification is the difference between education and misinformation.

Polishing the Final Vision Together

As the conversation continues, we realize the final polish is the stage that most clearly reveals human creativity. AI can generate raw material, but it lacks the emotional intelligence to refine it. Humans adjust lighting for mood, select colors for symbolism, and remove visual noise to improve clarity. “The artist’s fingerprint,” Russell calls it. “The final pass where intention outweighs probability.” It is here that the partnership between humans and AI reaches its strongest form: the machine provides the clay, and the human shapes it into meaning.

Creating a Workflow That Mixes Strengths

Together, we outline a simple but powerful workflow:

AI drafts ideas → Human selects purpose → AI generates variations → Human corrects errors → AI produces refined drafts → Human finalizes composition, typography, and accuracy → Finished design emerges.

Russell calls it “shared imagination.” Willard calls it “guided light.” I call it “co-creation.”

Recognizing That Better Collaboration Comes From Better Prompts

Before concluding, we discuss how humans can actively improve AI’s performance. Clear prompts reduce errors. Reference images improve consistency. Style locks prevent drift. And careful iteration teaches students how to think like designers with precision. The better the instructions, the better the partnership. AI becomes less chaotic and more predictable—not because it improves internally, but because the human guiding it becomes more skilled.

A Partnership Built on Strengths, Not Substitutions

In the end, our three voices agree: AI is not here to replace human designers. It is here to work beside them. It drafts with speed, but humans refine with meaning. It imagines broadly, but humans choose wisely. It generates possibilities, but humans create purpose. When used well, AI becomes a tool that amplifies creativity rather than replacing it. And the quality of the collaboration depends entirely on the intentions, ethics, and skill of the human who holds the tool.

Vocabulary to Learn While Learning About AI Image Generation

1. Diffusion Model

Definition: A type of AI system that creates images by starting with random noise and slowly shaping it into a picture using learned patterns.

Sentence: The diffusion model transformed my simple prompt about a dragon into a detailed and colorful illustration.

2. Embeddings

Definition: Numerical representations of words or ideas that help AI understand meaning.

Sentence: The AI used embeddings to figure out that “happy dog” should look different from “scared dog.”

3. Style Conditioning

Definition: A method of guiding AI to create images in a specific artistic style.

Sentence: By adding style conditioning to my prompt, the AI generated the scene in a watercolor style.

4. Reference Image

Definition: A picture provided to the AI to guide or influence its output.

Sentence: I uploaded a reference image so the AI would keep the character’s face consistent.

5. Composition

Definition: The arrangement of elements within a visual design.

Sentence: The composition of the poster made the title easy to read and the main image stand out.

6. Typography

Definition: The art and technique of arranging text in a visually appealing way.

Sentence: Good typography helped the infographic look clean and easy to understand.

7. Iconography

Definition: The use of simple images or symbols to convey meaning.

Sentence: The iconography in the app helped users quickly identify settings, messages, and notifications.

8. Vector Graphics

Definition: Images made of lines and shapes that can be resized without losing quality.

Sentence: The logo was created as vector graphics so it could be printed as a tiny sticker or a giant banner.

9. Style Drift

Definition: When AI-generated images slowly shift away from the intended artistic style.

Sentence: I noticed style drift in the character set when one picture looked realistic and the next looked cartoonish.

10. Licensing

Definition: Legal permission to use an image, design, or creative work.

Sentence: Before using the artwork in a school project, we checked its licensing to make sure it was allowed.

Activities to Demonstrate While Learning About AI Image Generation

Build Your First AI Character – Recommended: Beginner to Advanced Students

Activity Description: Students create a simple character using an AI image generator, then refine the prompt to improve consistency and clarity.

Objective: Teach students how prompts affect the AI’s interpretation and results.

Materials:
• AI image generator (DALL·E, ChatGPT, or Midjourney)
• Computers or tablets
• Paper for sketching
• Optional: Colored pencils or markers

Instructions:

Have each student sketch a basic character on paper (a robot, animal, explorer, etc.).

Students write a simple prompt describing the character.

They input the prompt into an AI image tool and generate an image.

Students review the results and refine their prompts, adding descriptors like colors, clothing, and emotion.

They generate a second image and compare the differences.

Students write a short reflection: “Which words changed the image the most?”

Learning Outcome: Students learn how descriptive language shapes visual outputs and how AI interprets prompts. They also understand the basics of character design and consistency.

Style Exploration Gallery Walk – Recommended: Intermediate to Advanced Students

Activity Description: Students experiment with generating the same subject in different artistic styles, then share their results in a classroom “gallery walk.”

Objective: Help students understand style conditioning and how visual language shifts across art styles.

Materials:
• AI image generator
• Printed or digital reference images
• Poster board or digital slide templates
• Sticky notes for peer feedback

Instructions:

Choose one subject (a treehouse, spaceship, cat, or historical figure).

Students generate images in multiple styles: watercolor, anime, realism, line art, blueprint, surrealism, etc.

Each student prints or displays their variations in a gallery format.

Students walk around viewing others’ galleries, leaving sticky note comments identifying stylistic elements.

Have a class discussion on which styles changed the mood or focus the most.

Learning Outcome: Students recognize how artistic styles influence tone and purpose while also learning to analyze and discuss visual aesthetics.

Historical Accuracy Challenge – Recommended: Intermediate to Advanced Students

Activity Description: Students use AI to generate images of a historical figure or event, then research to fact-check and correct inaccuracies.

Objective: Teach students to identify AI hallucinations, incorrect costuming, or anachronisms and revise prompts for accuracy.

Materials:
• AI image generator
• Reliable history sources: books, websites, or museum archives
• Worksheet for noting inaccuracies

Instructions:

Assign a historical figure (e.g., Cleopatra, George Washington, Mansa Musa).

Students create an AI-generated portrait or scene based on a basic prompt.

Students research to verify clothing, tools, environment, and cultural details.

They list inaccuracies in the AI image (armor wrong, hairstyle incorrect, added fantasy elements).

They revise their prompt with accurate descriptors and generate a corrected version.

Students present the “before” and “after” images.

Learning Outcome: Students learn to question AI outputs, spot inaccuracies, and understand why human revision is essential—especially in educational content.

Create a Consistent Character Comic Strip – Recommended: Beginner to Advanced Students

Activity Description: Students generate a short comic strip using AI-generated character art that remains visually consistent across panels.

Objective: Teach consistency management and the importance of repeated descriptors and reference images.

Materials:
• AI image generator that accepts reference images
• Comic strip template
• Computer or tablet

Instructions:

Students design a simple character and generate a base image.

They save the base reference and use it for every panel.

Students draft a short 3–6 panel story.

They generate images for each scene, using identical descriptors for the character’s features.

Students assemble the final comic strip and evaluate consistency.

They identify which prompt changes improved consistency.

Learning Outcome: Students practice character continuity and learn how prompt precision affects stability in AI-generated designs.
