Chapter 3: The Ethics and Laws of AI

- Zack Edwards

- Nov 6

Understanding AI Ethics: Why It Matters

Artificial Intelligence has the power to change everything—from how we learn and work to how we make decisions that affect lives. But with great power comes the need for great responsibility. AI is not evil, nor is it inherently good; it is a reflection of the person who uses it. The moral principles that guide its design must come from us—our sense of fairness, truth, compassion, and respect for others. These values must be built into every algorithm, every dataset, and every decision we allow a machine to make.

Balancing Innovation with Responsibility

I’ve seen many students and professionals become fascinated by what AI can do—and rightly so. It can write, design, analyze, and even solve problems faster than any person could alone. But speed without understanding is dangerous. We must remember that innovation means nothing if it sacrifices integrity. Just because we can create something powerful doesn’t mean we should unleash it without caution. True innovation works in harmony with responsibility, ensuring that progress serves humanity, not the other way around.

Learning from Success and Failure

Throughout history, technology has given us both great triumphs and deep mistakes. In healthcare, for example, AI has saved lives by detecting diseases early, but biased medical data has also led to misdiagnoses that harmed patients of certain races or backgrounds. Facial recognition systems have helped solve crimes, but they’ve also wrongly accused innocent people. These examples remind us that ethical design isn’t optional—it’s essential. Every success or failure in AI becomes a lesson about how human values must stay at the center of technological progress.

Why Using AI Wisely Matters

There is a temptation to let AI do everything for us—to write our essays, solve our math problems, or make choices we’d rather avoid. But every time we let a machine think for us, we lose a little bit of what makes us human: our creativity, problem-solving, and perseverance. AI should be a tool to make us more efficient and effective, not to replace our efforts. If you stop exercising your own mind, you’ll find it harder to think independently, to reason deeply, or to solve problems without help.

Becoming a Responsible Creator

Understanding AI ethics means realizing that you have power. Every prompt you write, every program you design, and every dataset you train is a chance to shape something that can affect the world. You must ask yourself not only “Can I do this?” but “Should I?” When used responsibly, AI can extend human potential and creativity in extraordinary ways. But when used carelessly, it can erode the very skills and values that define us.

My Name is Ada Lovelace: The First Computer Programmer

I was born in 1815, the daughter of two vastly different worlds—my father, Lord Byron, the fiery poet, and my mother, Anne Isabella Milbanke, a woman of sharp intellect and numbers. My mother feared I might inherit my father’s wild temperament, so she guided me toward the safety of mathematics and science. Numbers became my poetry, equations my verses. I found comfort in logic, yet my imagination always danced within it.

Meeting Charles Babbage and the Machine of Dreams

My life changed the day I met Charles Babbage, a man whose mind burned with invention. He showed me his “Difference Engine,” a vast contraption of gears and levers designed to calculate numbers. It was a marvel, but it was his next dream—the “Analytical Engine”—that truly captured my soul. I saw not just a machine of calculation, but a machine of creation. I studied its every diagram and note, translating and expanding upon them until they sang with new meaning.

The Notes That Changed the Future

When I translated a paper on Babbage’s Analytical Engine, written in French by the Italian engineer Luigi Menabrea, I added my own ideas—notes that grew longer than the original work. In them, I described how the machine might not only process numbers but also symbols, words, or music, if given proper instructions. It could, in theory, weave patterns of logic as the Jacquard loom wove patterns of silk. These “Notes by the Translator” became the foundation of what future generations would call computer programming.

My Vision of a Thinking Machine

I did not believe the machine could “think” as humans do; rather, it could mirror the logic we feed into it. I wrote that the Analytical Engine “has no pretensions whatever to originate anything. It can do whatever we know how to order it to perform.” I understood, even then, that the true power of machines lay not in their mechanics, but in the minds that designed and instructed them. That was both a promise and a warning—the potential for beauty or for folly rested with us.

The Legacy of Imagination and Reason

Though I did not live to see my vision realized, I believed the marriage of imagination and mathematics would one day transform the world. I saw beyond the brass and gears into a future of infinite computation—a future where machines could amplify human thought, where creativity would merge with logic to achieve wonders undreamed of in my time.

Bias in AI: How It Starts and How to Fix It – Told by Ada Lovelace

When I once imagined a machine capable of following instructions and calculating patterns, I believed it would only ever reflect the thoughts and intentions of its creator. That truth still stands. Every line of code, every dataset, every algorithm carries the mark of the human hand that built it. Artificial Intelligence learns from the information we feed it—information shaped by our culture, history, and perception. If the data is biased, the machine will echo that bias. It cannot correct what it does not know is wrong.

How Bias Begins

Bias often starts before the first line of code is written. It hides in the data we collect—the faces we photograph, the words we record, the patterns we decide are “normal.” If a dataset includes more examples of one group of people than another, the machine will learn to favor that group. A facial recognition system trained mostly on lighter skin tones may struggle to recognize darker ones. A hiring algorithm trained on years of company records may inherit the same gender or racial imbalances already present in human decisions. Bias begins quietly, in the numbers, long before a machine ever speaks.

When Bias Becomes Visible

Modern controversies make this truth painfully clear. One such example came from Google’s Gemini program, where attempts to correct historical imbalance in generated images led to unintended results. When asked to create pictures of certain historical figures or groups, the system produced unrealistic or anachronistic images—such as a depiction of George Washington as Black. This was not an act of malice, but a symptom of programming shaped by human political ideals and the complexities of diversity training data. When fairness is forced without balance, the result can appear artificial or even misleading. The challenge lies not in erasing history or replacing it, but in representing it truthfully and inclusively.

The Hidden Bias of the Programmer

Every programmer carries unseen beliefs—moral, political, or cultural—that can influence how they design systems. The values chosen for a machine’s goals or constraints are not neutral; they reflect the creator’s worldview. Whether through the words selected for a language model or the filters applied to search results, bias can slip through human hands unnoticed. Even the decision of what data to include, or exclude, reveals a kind of judgment. In this way, the machine does not simply reflect the world—it reflects the people who shape it.

Fixing What We Build

To correct bias, one must begin with awareness. Bias mitigation starts with balanced data collection—gathering examples from many groups and perspectives so the machine can learn from a broader truth. It requires auditing algorithms to see how they perform across different groups and making adjustments when unfair patterns emerge. Transparency is equally vital: we must know how an AI system was trained, what data it used, and what assumptions guided its design. In short, machines must be taught to see the world more fully than any one of us can.
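For readers who would like to see what such an audit might look like, here is a minimal sketch in Python. It assumes each decision has already been recorded with the person's group, the system's prediction, and the true outcome; the field names (`group`, `predicted`, `actual`) and the sample data are invented for illustration and do not come from any real system. It reports two simple measures per group: how often the system says "yes" (the selection rate) and how often it is right (the accuracy).

```python
from collections import defaultdict


def audit_by_group(records):
    """Return the selection rate and accuracy for each group.

    Each record is a dict with hypothetical keys:
    'group' (str), 'predicted' (0 or 1), 'actual' (0 or 1).
    """
    totals = defaultdict(lambda: {"n": 0, "selected": 0, "correct": 0})
    for r in records:
        stats = totals[r["group"]]
        stats["n"] += 1
        stats["selected"] += r["predicted"]
        stats["correct"] += int(r["predicted"] == r["actual"])
    return {
        group: {
            "selection_rate": s["selected"] / s["n"],
            "accuracy": s["correct"] / s["n"],
        }
        for group, s in totals.items()
    }


def selection_rate_gap(report):
    """Difference between the highest and lowest selection rate."""
    rates = [m["selection_rate"] for m in report.values()]
    return max(rates) - min(rates)


# Made-up decisions for two groups, A and B.
records = [
    {"group": "A", "predicted": 1, "actual": 1},
    {"group": "A", "predicted": 1, "actual": 0},
    {"group": "A", "predicted": 0, "actual": 0},
    {"group": "A", "predicted": 1, "actual": 1},
    {"group": "B", "predicted": 0, "actual": 1},
    {"group": "B", "predicted": 0, "actual": 0},
    {"group": "B", "predicted": 1, "actual": 1},
    {"group": "B", "predicted": 0, "actual": 1},
]

report = audit_by_group(records)
gap = selection_rate_gap(report)

if __name__ == "__main__":
    for group, metrics in sorted(report.items()):
        print(group, metrics)
    print("selection rate gap:", gap)
```

A large gap does not prove that a system is unfair, and a small one does not prove it is fair. The numbers only tell the auditor where to look more closely.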

A Call for Responsible Creation

Bias will never vanish entirely, for it begins in human imperfection. But we can build systems that strive for fairness, that question their own limitations, and that learn to improve. When we treat Artificial Intelligence as a partner in progress rather than a perfect authority, we preserve both innovation and integrity. The machine’s greatest potential lies not in replacing human judgment, but in reminding us to examine our own.

Fairness and Accountability in Machine Learning – Told by Ada Lovelace

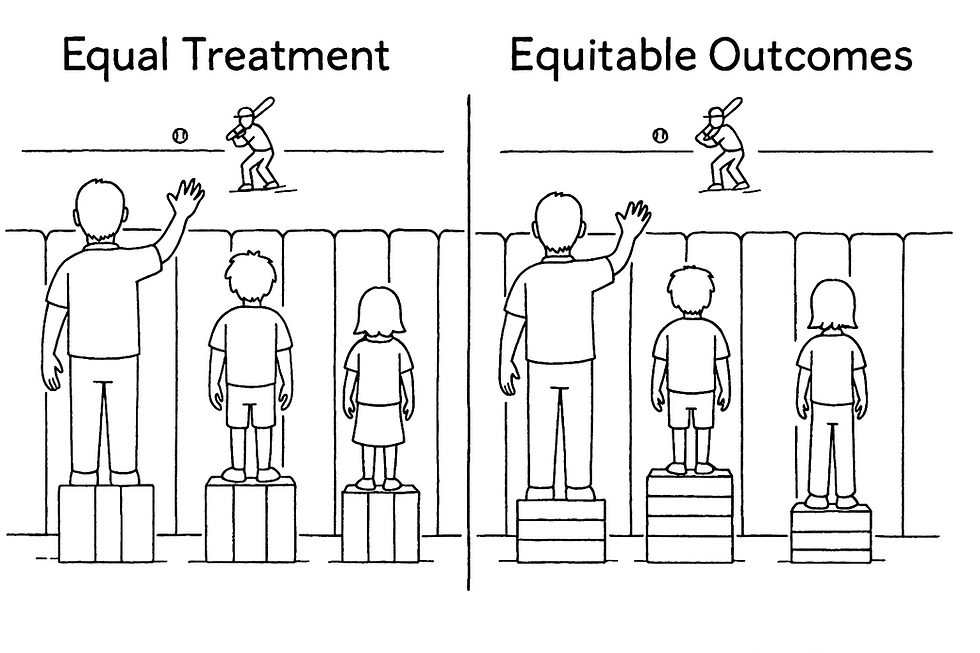

In my time, I studied the logic of machines and the precision of numbers, yet even then, I understood that calculation alone could not capture the moral weight of fairness. In the age of intelligent machines, fairness has become a question of both equality and justice. Equal treatment means giving every individual the same opportunity, the same process, and the same standard. Yet a philosophy has arisen called “Equitable Outcome,” which recognizes that people do not all begin in the same place, and sometimes systems must adjust to produce results that are truly just. A machine may treat everyone identically, but if it was trained on unequal data, its fairness will be only an illusion.

The Weight of Responsibility

Every machine follows instructions written by human hands, which means responsibility always returns to us. When an algorithm denies someone a loan, misidentifies a face, or spreads misinformation, the fault does not rest with the program—it rests with its creators, the engineers, the companies, and the regulators who failed to foresee its harm. Accountability in machine learning demands that someone always stands behind the code. No decision made by a machine should exist without a human answerable for its outcome. To create is to bear responsibility for what is created, no matter how complex the mechanism.

The Need for Transparency

True accountability cannot exist without understanding. If those affected by an AI system cannot see how or why it made a decision, then fairness becomes impossible to judge. That is why transparency reports and explainable AI, or XAI, are so vital. These tools open the black box, revealing how algorithms weigh data, what features they prioritize, and where they might be mistaken. An explainable machine allows its makers and its users to question it—to see whether its reasoning follows logic or prejudice. Only by understanding the process can we begin to correct it.
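To make the idea of "revealing how algorithms weigh data" concrete, here is a small Python sketch. It assumes the simplest possible case: a toy loan score that is just a weighted sum of three inputs. The weights, the threshold, and the feature names are all invented for illustration. Real systems are rarely this simple, and explaining them usually requires more sophisticated techniques than the one shown here.

```python
# Hypothetical weights for a toy loan-scoring model (invented for illustration).
WEIGHTS = {
    "income_thousands": 0.5,
    "years_employed": 2.0,
    "missed_payments": -10.0,
}
BASE_SCORE = 10.0
APPROVAL_THRESHOLD = 40.0


def explain(applicant):
    """Score an applicant and show how much each feature contributed."""
    contributions = {
        name: WEIGHTS[name] * applicant[name] for name in WEIGHTS
    }
    score = BASE_SCORE + sum(contributions.values())
    # Rank features by the size of their effect, whether helpful or harmful.
    ranked = sorted(
        contributions.items(), key=lambda item: abs(item[1]), reverse=True
    )
    return {
        "score": score,
        "approved": score >= APPROVAL_THRESHOLD,
        "ranked": ranked,
    }


applicant = {"income_thousands": 60, "years_employed": 5, "missed_payments": 2}
result = explain(applicant)

if __name__ == "__main__":
    print("score:", result["score"], "approved:", result["approved"])
    for name, value in result["ranked"]:
        print(f"  {name}: {value:+.1f}")
```

With an explanation like this, the applicant can see that two missed payments cost them twenty points, and a reviewer can ask whether that weight is justified.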

The Future of Ethical Design

Fairness and accountability are not technical features—they are moral commitments. Machines can process endless data, but they cannot comprehend virtue unless we define it for them. We must design systems that can be audited, corrected, and understood by those they affect. The future will not belong to those who create the fastest machines, but to those who create the fairest.

The Human Obligation

I once wrote that a machine “can do whatever we know how to order it to perform.” That truth carries a warning. The more powerful our machines become, the greater our duty to guide them wisely. If fairness is the measure of justice, then accountability is its guardian. In every algorithm, every dataset, and every model, we must ensure that reason serves humanity—and never the other way around.

My Name is Immanuel Kant: Philosopher of Moral Reason

I was born in 1724 in Königsberg, a small Prussian city where I would live my entire life. My days were marked by regularity—so regular that townsfolk set their clocks by my afternoon walks. Yet within that routine, my mind roamed freely through questions that reached beyond the borders of my world. What is truth? What is duty? What makes an action right or wrong? These questions shaped my life’s work. I was not a man of wealth or travel, but of reason and reflection.

The Limits of Human Understanding

In my youth, I studied mathematics and physics, inspired by the discoveries of Newton. I came to realize that reason itself has laws as strict as those governing the stars. My early writings explored nature and motion, but over time my attention turned inward—to the mind itself. I began to ask: How do we know what we know? I concluded that our minds shape experience through patterns of understanding—what I called “categories.” We do not see the world as it truly is, but as our reason allows us to perceive it.

The Birth of the Categorical Imperative

From this realization came my moral philosophy. I believed that morality, like physics, must have universal laws. I called this principle the “Categorical Imperative.” To act morally is to act only on maxims that you could will to become universal law. This means we must treat every person as an end in themselves, never merely as a means to our own goals. Duty, not desire, must guide our actions. It is reason—not passion—that lights the path of morality.

A Mind in the Age of Machines

Though I lived in a time before machines could think, I imagined what might happen if reason itself were made mechanical. If I could speak to your century, I would say this: an artificial intelligence that follows reason without moral intent risks becoming a perfect servant of imperfect will. A machine might act according to logic, but without conscience, its actions could still cause harm. The true measure of intelligence is not in calculation but in moral choice.

The Ethics of the Thinking Machine

If one were to build a machine capable of judgment, it must be bound by principles similar to those I once described. It should ask before acting: Could this decision be willed as a law for all? Does it respect the dignity of every rational being? These are not merely questions for humans—they are the essence of ethical reason, whether of flesh or of circuit. The greatest potential of such a creation lies not in its power, but in its capacity to reflect humanity’s highest duty: to act justly, with respect for all who share the light of reason.

The Legacy of Duty and Reason

I never sought fame, only understanding. Yet my work endures because reason endures. The march of progress may bring machines that think faster than we do, but it will never replace the necessity of moral law. A computer may analyze outcomes, but only the human spirit can discern what ought to be done. That, I believe, is the boundary where ethics begins.

Privacy and Data Protection in the Age of AI – Told by Immanuel Kant

In my philosophy, I taught that every human being must be treated as an end in themselves, never merely as a means to an end. This principle lies at the heart of privacy in your modern world. When artificial intelligence gathers, analyzes, and profits from personal data, it risks reducing people to mere instruments—sources of information rather than beings of moral worth. Each person’s private life, their thoughts and choices, belongs to them alone. To use that data without their knowledge or consent is to disregard the dignity that defines them as human.

The Temptation of Overreach

The power of AI to collect and interpret data is immense. It observes what people search, buy, read, and even feel. In doing so, it constructs profiles that predict behavior, influence opinion, and shape identity. This ability tempts governments and corporations to reach beyond what is ethical, gathering far more than is needed and using it for purposes never disclosed. Such overreach erodes trust, and trust is the foundation of every moral and social order. To act rightly is to respect the boundaries of another’s autonomy.

Privacy by Design and Moral Intention

Technology must be guided by intention, not impulse. Privacy-by-design is not only a technical strategy—it is a moral one. Systems must be built from the beginning to respect privacy, not altered after harm is done. Anonymization, encryption, and data minimization are all acts of moral restraint, a recognition that the freedom of one person should not be sacrificed for the convenience of another. When developers design an algorithm, they must ask: Does this tool respect the individual, or does it exploit them? That question defines whether it serves good or harm.
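As an illustration of what data minimization can look like in practice, here is a short Python sketch. It assumes a feature that only needs a person's age band and country, so every other field is discarded before the record is stored. It also replaces the raw identifier with a keyed hash using Python's standard `hmac` and `hashlib` modules. Note that this is pseudonymization, which is weaker than true anonymization, because anyone holding the key could recompute the link. The field names, the sample record, and the key are all made up for the example.

```python
import hashlib
import hmac

# The only fields this hypothetical feature actually needs.
ALLOWED_FIELDS = {"age_band", "country"}


def pseudonymize_id(user_id, secret_key):
    """Replace a raw identifier with a keyed hash (HMAC-SHA256)."""
    digest = hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()


def minimize(record, secret_key):
    """Keep only the allowed fields and swap the identifier for a pseudonym."""
    reduced = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    reduced["user_ref"] = pseudonymize_id(record["user_id"], secret_key)
    return reduced


# Invented example record; nothing here describes a real person.
raw_record = {
    "user_id": "alice@example.com",
    "full_name": "Alice Example",
    "age_band": "25-34",
    "country": "DE",
    "search_history": ["symptoms of flu", "cheap flights"],
}

# In a real system the key would be stored securely, never in source code.
DEMO_KEY = b"demo-key-for-illustration-only"
safe_record = minimize(raw_record, DEMO_KEY)

if __name__ == "__main__":
    print(safe_record)
```

The design choice worth noticing is the allow-list: the code names what it is permitted to keep, so any new field added later is dropped by default rather than collected by accident.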

The Principle of Consent

Consent is the cornerstone of ethical data use. To collect information about a person without their understanding or approval is to deny their freedom to choose. True consent requires clarity—individuals must know what is taken, why, and how it will be used. Anything less is manipulation. In my time, I taught that freedom is the ability to act according to one’s own rational will. In your age, that freedom includes the right to decide how one’s digital self is treated.

The Laws that Uphold Dignity

Your world has begun to recognize this truth in law. The General Data Protection Regulation in Europe, the California Consumer Privacy Act in America, and the Children’s Online Privacy Protection Act all serve as modern expressions of a moral idea: that individuals own their data as they own their thoughts. These regulations attempt to restore balance, reminding creators and corporations that progress without respect for dignity is not progress at all.

The Moral Path Forward

Artificial intelligence will continue to grow in power and reach, but the measure of its worth will depend on how it honors the individual. A just society does not use knowledge to control, but to enlighten. A moral machine is one that remembers this distinction. Privacy is not simply a right—it is a reflection of respect, the acknowledgment that behind every piece of data is a person, and behind every person, a soul worthy of protection.

The Laws and Regulations Governing AI

As technology advances, governments around the world are racing to understand and control the power of artificial intelligence. For decades, technology moved faster than any law could keep up, but AI has forced leaders to confront questions of responsibility, fairness, and safety that cannot be ignored. When machines can make decisions once reserved for humans, the rules of accountability must be rewritten. The challenge is not just to regulate innovation, but to guide it toward serving humanity rather than replacing it.

Global Efforts to Create Order

Around the world, different nations and organizations are beginning to build legal frameworks for AI. The European Union has taken the lead with the EU AI Act, one of the first comprehensive attempts to classify AI systems based on risk—from minimal to unacceptable—and to apply stricter requirements to those that could affect human rights, safety, or democracy. In the United States, several federal and state initiatives are emerging, focusing on transparency, data protection, and ethical development. Agencies are drafting guidelines to ensure AI used in hiring, law enforcement, and healthcare is fair and explainable. Meanwhile, UNESCO has introduced global ethical principles for AI, emphasizing human oversight, inclusivity, and respect for cultural diversity. These efforts show that while AI is borderless, accountability must not be.

Defining Accountability and Risk

The most difficult question in AI law is determining who is responsible when things go wrong. Is it the programmer, the company, or the algorithm itself? Laws are beginning to draw lines between creators and users, demanding that systems be tested, documented, and monitored for bias or harm. The idea of risk-based regulation allows governments to focus attention where it matters most—AI used in security, education, or healthcare—while allowing low-risk tools like chatbots or recommendation engines more freedom. This layered approach recognizes that not all AI carries the same potential for damage, but all require transparency to build trust.

The Struggle to Keep Pace

No matter how carefully laws are written, technology evolves faster than legislation. By the time a new law is debated and passed, AI has already advanced to a new level of complexity. This creates a constant tension between innovation and control. Regulators must design flexible frameworks that can adapt to future changes while still protecting people today. It is a delicate balance—too little oversight invites abuse, but too much can stifle discovery. The key lies in principles that endure, even when the tools themselves change: fairness, safety, and accountability.

A Future of Responsible Progress

The story of AI regulation is still being written, and every nation contributes a different chapter. Some will focus on ethics, others on economic opportunity, and still others on national security. But the ultimate goal must remain the same—to ensure that AI serves people, not profits alone. Laws should not fear progress; they should shape it. If we can align innovation with responsibility, then artificial intelligence can become one of humanity’s greatest achievements, guided by rules that protect both freedom and truth.

Ethical Decision-Making in AI: Case Studies and Scenarios – Told by Kant

Ethical decision-making has always been a struggle between what is right and what is merely effective. In your time, artificial intelligence faces this same test. Machines now assist in choices that were once purely human—who receives medical care first, how justice is distributed, and whose safety is prioritized in a crisis. Yet these decisions are not mere calculations. They demand moral judgment, something reason can guide but never fully automate. A good choice, in the moral sense, must always respect human dignity, even when efficiency might suggest another path.

The Dilemma of the Self-Driving Car

Consider the case of a self-driving vehicle. If faced with an unavoidable accident, must it choose between saving its passenger or a group of pedestrians? The utilitarian approach, which seeks the greatest good for the greatest number, might instruct the car to minimize casualties, even if that means sacrificing its passenger. My philosophy of duty, however, would ask a different question: does the car’s action treat every human as an end in themselves, or does it use some lives merely as a means to preserve others? No calculation of numbers can justify the intentional harm of one innocent person. Thus, the moral challenge of AI is not just to count consequences, but to preserve respect for all individuals equally.

Predictive Policing and the Problem of Prejudgment

Another dilemma arises in predictive policing, where algorithms analyze data to forecast where crimes may occur or who might commit them. While such systems claim to improve safety, they risk judging individuals by patterns rather than by actions. When AI predicts guilt, it denies people the moral right to be treated as autonomous beings capable of change. True justice must judge deeds, not data. A system that punishes potential rather than reality fails to respect human freedom—the very essence of moral responsibility.

The Promise and Peril of Medical AI

In the field of medicine, AI now helps diagnose diseases, prioritize treatments, and allocate resources. When used responsibly, it can save lives. Yet when hospitals rely solely on an algorithm to determine who receives care, they risk reducing human life to a numerical output. Ethical design requires that AI serve as an advisor, not a decision-maker. A machine may calculate risk, but compassion cannot be computed. The doctor, not the data, must hold the final authority, for only a person can act from moral duty and empathy.

Activities for Moral Reflection

I would ask students to examine these dilemmas not to find one perfect answer, but to understand the reasoning behind each choice. Debate whether saving more lives is always the highest good, or whether intention and principle matter more than outcome. Create your own scenarios—an AI teacher grading essays, or a hiring system filtering applicants—and ask who bears responsibility when bias appears. Discuss what principles you would build into an AI’s code if it were to act as your moral partner.

The Eternal Debate: Utilitarianism vs. Deontology

Your world continues to wrestle with two great traditions of ethics. Utilitarianism measures morality by results—if the outcome produces happiness, it is good. My own view, deontology, measures morality by duty—an act is right if it follows moral law, regardless of consequence. In AI, this debate becomes more urgent than ever. A machine built only to maximize benefit may learn to justify harm in pursuit of its goal. One built on moral duty, however, must always ask whether its action respects the humanity of all it touches.
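The difference between these two ways of deciding can be sketched in a few lines of Python. This is a deliberately crude simplification of both traditions, offered only as a starting point for discussion: the `benefit` numbers and the `harms_innocent` flag are invented, and no real moral question reduces to a single score or a single yes-or-no rule. The first function picks whatever scores highest. The second refuses certain options outright and only then compares what remains.

```python
def outcome_only_choice(options):
    """Pick the option with the highest benefit; nothing else is considered."""
    return max(options, key=lambda option: option["benefit"])


def duty_bound_choice(options):
    """Rule out options that break a hard constraint, then pick the best left."""
    permitted = [o for o in options if not o["harms_innocent"]]
    if not permitted:
        # No acceptable action exists: hand the decision to a human.
        return None
    return max(permitted, key=lambda option: option["benefit"])


# Invented options for illustration only.
options = [
    {"name": "plan_a", "benefit": 10, "harms_innocent": True},
    {"name": "plan_b", "benefit": 7, "harms_innocent": False},
    {"name": "plan_c", "benefit": 3, "harms_innocent": False},
]

outcome_pick = outcome_only_choice(options)
duty_pick = duty_bound_choice(options)

if __name__ == "__main__":
    print("outcome-only picks:", outcome_pick["name"])
    print("duty-bound picks:", duty_pick["name"])
```

Here the outcome-only rule selects `plan_a` despite the harm it causes, while the duty-bound rule selects `plan_b`. Students might debate who gets to decide what counts as a hard constraint, and what should happen when every option violates one.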

Reason and Conscience in the Age of Machines

The machines you build are reflections of your own reason. If they are to serve humanity, they must inherit not only your intelligence but your conscience. The ultimate purpose of ethical decision-making in AI is not to eliminate human judgment but to strengthen it. Each scenario, each dilemma, is a chance to remember that technology may calculate, but only humanity can choose what is right.

AI and Misinformation: Truth, Deepfakes, and Digital Trust

In our modern world, truth and illusion have become harder to tell apart. Artificial Intelligence now has the power to create convincing videos, realistic images, and perfectly written articles that can appear as genuine as any human creation. Deepfake technology can place real faces into false scenes, while AI-generated news can spread across social platforms before anyone has time to question it. These tools, when used ethically, can educate or entertain—but in the wrong hands, they can manipulate entire populations. The danger lies not in AI itself, but in how humans use it.

How Misinformation Takes Shape

AI models learn by absorbing massive amounts of data from across the internet—news, social posts, videos, and articles. This means their understanding of truth depends on the quality of that data. When misinformation already exists online, the AI can unknowingly repeat or reinforce it. Even more concerning, generative models can create new falsehoods with a tone of confidence, a phenomenon known as “hallucination.” These are moments when the AI fabricates facts or sources that sound believable but are completely untrue. The goal of developers is to reduce these errors, but perfection remains distant.

The Influence of Bias

AI systems are only as balanced as the humans and data behind them. Every programmer carries personal perspectives, political leanings, and values that shape how their algorithms work. The information sources the AI learns from are also colored by bias. This is why two different systems can produce two entirely different answers to the same question. When using AI, remember that its “truth” is filtered through the worldview of both the coder and the content. One way to help limit bias when prompting is to specify what sources you do or do not want it to use. For instance, you can instruct AI to avoid crowdsourced platforms like Wikipedia, where accuracy often depends on who edited the page that day. One of the site’s co-founders, Larry Sanger, has publicly criticized it for ideological bias. Always take what you receive with a grain of salt—check it, compare it, and edit carefully.

Detecting Deception and Building Digital Literacy

To protect yourself from misinformation, you must become an investigator. Always verify the source of an image, article, or video before sharing it. Reverse-image searches, fact-checking sites, and AI detection tools can help reveal whether content is authentic or manipulated. Look for multiple independent confirmations, not just repetition of the same story. Digital literacy means questioning before believing and understanding how easily truth can be distorted in the digital age.

When Lies Become Dangerous

The impact of misinformation is far-reaching. False medical claims can endanger lives, fabricated political stories can sway elections, and misleading images can fuel social division. Once a lie spreads, it often travels faster and farther than the truth. AI amplifies both good and bad information, and it is our responsibility to ensure that what we share contributes to understanding, not confusion. Truth must never become a casualty of convenience.

Becoming Guardians of Truth

Artificial Intelligence is an incredible tool, but it is not a source of unquestionable truth. It mirrors humanity—our brilliance, our flaws, and our biases. Use it to explore ideas, to learn, and to test your own reasoning, but never surrender your judgment to it. The more powerful our tools become, the more careful we must be with how we use them. Real wisdom comes not from letting AI think for us, but from using it to sharpen our own ability to think critically, discern clearly, and pursue truth in a world filled with digital shadows.

AI’s Impact on Jobs, Economy, and Human Rights – Told by Zack Edwards

We are standing at the edge of one of the greatest transformations since the Industrial Revolution. Artificial Intelligence is changing not only how we work but what work itself means. It has the potential to improve efficiency, reduce costs, and create new opportunities. Yet it also raises deep ethical questions—what happens to the people whose jobs are replaced by machines? How do we balance innovation with compassion? Every generation faces a moment when technology challenges the foundation of society, and ours is facing it now.

The Ethics of Automation

Automation has always been both a promise and a threat. Factories once replaced human hands with machines, and now offices replace human minds with algorithms. The ethical question is not whether automation should happen, but how it should happen. If AI replaces tasks that are repetitive, dangerous, or unfulfilling, it can free people to pursue higher forms of creativity and problem-solving. But when companies use automation simply to cut costs without considering the lives it disrupts, it crosses into moral neglect. Ethics demands that innovation uplift humanity, not discard it.

The Truth About Jobs and Trades

There is a common fear that AI will eliminate most jobs, but the truth is more complex. Most trades—such as electricians, plumbers, carpenters, and mechanics—will not be easily replaced by AI. These roles require hands-on skill, problem-solving, and adaptability that machines still cannot replicate. Even as robotics becomes more advanced, the demand for trades will remain, though it may evolve. On the other hand, white-collar professions—like accounting, law, or writing—are already feeling the effects of automation. The key to survival in this new age is not resistance, but adaptation.

Becoming the Manager of AI, Not Its Victim

In the coming years, employers will not just value technical knowledge; they will value those who know how to use AI effectively. Learning how to manage AI—to guide it, verify its work, and integrate it into your own productivity—will make you more valuable, not less. The person who knows how to use AI to complete their work faster and better will stand above those who do not. If your position is ever replaced by automation, that knowledge gives you another advantage: the ability to start something new. When you understand AI, you can build a business around the gaps it has not yet filled. The future does not belong to those who fear change—it belongs to those who lead it.

Global Inequality and Access

Not all nations and communities benefit equally from AI. Wealthier countries and companies often have access to powerful tools, massive datasets, and skilled engineers, while poorer regions struggle to keep up. This imbalance risks creating a world where opportunity is defined by technology rather than talent. Ethical development of AI must prioritize equitable access, ensuring that it helps reduce inequality rather than deepen it. Human-centered AI should focus on improving lives across all societies, not just maximizing profit for a few.

The Human Side of Progress

Human rights must remain at the core of technological advancement. The right to work, to dignity, and to personal growth should not vanish in the shadow of machines. AI can be used to enhance these rights by making education more accessible, by improving healthcare, and by helping people build new careers. The measure of success for any technology should not be how much wealth it generates, but how much it empowers the human spirit.

A Future You Can Shape

Artificial Intelligence is not the end of work; it is the evolution of it. The people who thrive will be those who see AI as a tool, not a threat. Learn how it works. Master it. Use it to create, to teach, to build, and to improve the world around you. The best way to protect your future is to take control of it. AI is reshaping the economy, but it is also handing us a new kind of freedom: the freedom to imagine new paths forward and to design a future worthy of the intelligence we’ve created.

Tools for Exploring and Teaching AI Ethics – Told by Zack Edwards

Artificial Intelligence is best understood when it is experienced, not just studied. Ethics in AI is not about memorizing definitions but about understanding consequences. When students can see bias, deception, or misinformation in action, the lessons become real. The following tools allow students to experiment with AI in ways that build awareness, sharpen critical thinking, and promote responsibility. Each one gives a hands-on opportunity to see both the potential and the pitfalls of modern technology.

ChatGPT and Scenario Analysis Prompts

One of the best ways to teach ethical reasoning is through simulation. Using ChatGPT, students can analyze moral dilemmas where no single answer is perfect. You might ask the AI to simulate a company deciding whether to release a facial recognition tool that could improve security but risks invading privacy. Students can debate each choice and consider who benefits and who could be harmed. This type of exercise builds empathy and teaches them that ethical reasoning is often about balancing competing values rather than finding an easy answer.

AI Fairness 360 by IBM

To see bias in action, students can explore AI Fairness 360, an open-source toolkit developed by IBM. It allows users to test real or simulated datasets to find where bias exists and how it affects results. For example, students might analyze a hiring dataset to see if the algorithm favors one gender or race. By adjusting variables and comparing outcomes, they can learn how fairness can be measured and improved. This tool turns an abstract concept into a visible, measurable experience.
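To make the idea of measuring fairness concrete, here is a minimal sketch in plain Python. It does not use the AI Fairness 360 library itself, and the hiring records and group names are invented for illustration only. It compares how often two groups are hired, using two commonly used fairness measures: the difference and the ratio of their selection rates.

```python
# Hypothetical hiring records: (group, hired). Invented for illustration only.
applicants = [
    ("A", True), ("A", True), ("A", True), ("A", False), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False), ("B", False),
]


def selection_rate(records, group):
    """Fraction of applicants in `group` who were hired."""
    outcomes = [hired for g, hired in records if g == group]
    return sum(outcomes) / len(outcomes)


rate_a = selection_rate(applicants, "A")  # 3 of 5 hired
rate_b = selection_rate(applicants, "B")  # 1 of 5 hired

# Statistical parity difference: 0 means both groups are hired at the same rate.
parity_difference = rate_b - rate_a

# Disparate impact ratio: 1 means equal rates; values well below 1 mean
# group B is selected less often than group A.
disparate_impact = rate_b / rate_a

print(f"Selection rate A: {rate_a:.2f}")
print(f"Selection rate B: {rate_b:.2f}")
print(f"Statistical parity difference: {parity_difference:.2f}")
print(f"Disparate impact ratio: {disparate_impact:.2f}")
```

Students can change the records so both groups are hired at the same rate and watch the difference move toward 0 and the ratio toward 1. A gap in these numbers does not prove discrimination on its own, but it shows where to look more closely.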

Deepfake Detectors: Reality Defender and Hive Moderation

Deepfakes are one of the most powerful and dangerous tools of misinformation. They can make someone appear to say or do something that never happened. Using detection tools like Reality Defender or Hive Moderation, students can upload or analyze media to determine whether it has been altered. Watching these systems break down how they detect manipulations helps students understand the importance of verification before believing or sharing any content. It also opens discussions about authenticity, trust, and the ethical use of digital imagery.

Google Fact Check Tools

Fact-checking is the foundation of truth in the digital world. Google’s Fact Check Tools allow students to see how claims circulate online and which sources have verified or debunked them. Students can search a news topic and see how various organizations report on it, learning that even reputable sources can differ in tone or emphasis. This activity builds digital literacy, teaching students not to accept the first result they see but to question, compare, and verify.

Guided Exercises and Reflection

To bring these tools together, assign guided exercises that require both technical engagement and personal reflection. For instance, after using AI Fairness 360, have students write about how bias might affect people in real life. Following a deepfake analysis, ask them how they would respond if they discovered someone’s reputation was damaged by false media. After a fact-checking exercise, let them discuss how they decide what is trustworthy. Reflection transforms skill into wisdom; it teaches students not just how to use these tools, but why using them well matters.

Becoming Ethical Technologists

The ultimate goal of using these programs is not to make students experts in software, but to make them thoughtful, ethical users of technology. By interacting with real-world tools, they learn that AI is both powerful and fallible. It can serve truth or manipulate it; it can promote equality or deepen bias. These lessons prepare them for a future where understanding AI ethics will be as important as knowing how to read, write, or reason. When students engage, question, and reflect, they move from being passive users of AI to becoming active guardians of its ethical use.

Building a Framework for Responsible AI – Told by Kant and Lovelace

In a quiet study filled with the hum of mechanical imagination, I, Ada Lovelace, found myself in conversation with the philosopher Immanuel Kant. Our worlds were nearly a century apart, his grounded in moral law and mine in the possibilities of machines, but our questions were the same: How do we ensure that progress serves humanity rather than commands it? The logic of computation and the duty of conscience, when joined, can form the foundation of responsible Artificial Intelligence.

Where Ethics, Law, and the Social Good Converge

Kant began our discussion by reminding me that ethics cannot exist apart from human intention. “Every law,” he said, “must rest upon respect for the dignity of the individual. If AI acts without this respect, no innovation can justify it.” I agreed, explaining that a machine has no morality of its own; it only amplifies the values we place within it. Law defines what is allowed, but ethics defines what is right. The social good arises when both align, when technology obeys justice as well as efficiency. Together, ethics, law, and public benefit form a triangle of balance: remove one side, and the structure collapses.

AI for Good and the Pursuit of Sustainability

We spoke then of how AI might serve the good of all, not just the advancement of the few. I described how modern thinkers use AI to fight climate change, predict disease, and expand education to places long forgotten by opportunity. Kant nodded thoughtfully. “Such uses,” he said, “embody duty to humanity: the use of reason not for power, but for preservation.” True progress, he reminded me, is not measured by invention alone, but by whether invention uplifts life. The sustainability goals of the modern world (clean energy, justice, equality) are noble only when guided by reason that values the future as much as the present. AI, used rightly, could become a servant of these goals, a mirror of our highest purpose.

Creating Ethical Guidelines for Tomorrow

I told Kant that the greatest challenge for young scholars today is not simply learning how AI works, but deciding what it should do. He replied that morality begins with principle. “Let your students write their own laws,” he said, “as though they were creating a moral code for all machines. They must ask: does this principle honor truth? Does it preserve freedom? Would I wish this to be universal?” I added that their code should include technical wisdom: auditing data for bias, protecting privacy, ensuring fairness, and questioning every outcome. In doing so, they would not just learn about AI; they would shape its destiny.

A Shared Vision for the Future

As our discussion drew to a close, we both saw that ethics and innovation are not enemies but companions. Kant’s voice carried the weight of principle, mine the rhythm of invention, yet both agreed on a single truth: Artificial Intelligence must never outgrow its humanity. The framework for responsible AI is not built from metal or code; it is built from character. If future generations can unite conscience and creativity, then the machines they design will not only think with precision but act with wisdom.

Vocabulary to Learn While Learning About How AI Works

1. Fairness

Definition: The quality of making judgments that are free from discrimination or favoritism.

Sentence: Fairness in AI means ensuring that all people are treated equally by an algorithm, regardless of race or gender.

2. Accountability

Definition: The responsibility of individuals or organizations to answer for their actions and decisions.

Sentence: When an AI system makes a harmful decision, accountability must fall on the people who designed or deployed it.

3. Transparency

Definition: The practice of making information or processes clear and understandable to others.

Sentence: Transparency in AI helps people see how a system makes decisions and builds public trust.

4. Privacy

Definition: The right of individuals to keep their personal information secure and free from unauthorized access.

Sentence: Protecting user privacy is a major challenge in the design of modern AI systems that collect large amounts of data.

5. Data Protection

Definition: The process of safeguarding important information from corruption, compromise, or loss.

Sentence: Companies must follow strict data protection rules when using customer information to train AI systems.

6. Consent

Definition: Permission given by a person for something to happen or for their information to be used.

Sentence: Users must give consent before their data is shared with third-party AI applications.

7. Deepfake

Definition: A video, image, or audio clip that has been created or altered with AI to make it look like someone did or said something they never did.

Sentence: The deepfake of a famous politician spread online, misleading many viewers before it was proven false.

8. Regulation

Definition: A rule or law created and enforced by authorities to control how something is done.

Sentence: New regulations are being developed to ensure AI technologies are used safely and ethically.

9. GDPR (General Data Protection Regulation)

Definition: A European Union law that gives people more control over how their personal data is collected and used.

Sentence: The GDPR requires companies to tell users what data is being collected and how it will be stored.

10. Ethics

Definition: The principles of right and wrong that guide people’s behavior and decision-making.

Sentence: AI ethics asks whether the decisions made by machines align with human values and moral standards.

Activities to Demonstrate While Learning About How AI Works

The Biased Machine – Recommended: Intermediate and Advanced Students

Activity Description: Students explore how bias can appear in AI systems by “training” a simple image recognition model using an online tool like Teachable Machine (by Google). They will upload photos or drawings of everyday items (such as cats and dogs) and see how uneven data can lead to unfair results.

Objective: To help students understand how bias is created when AI models are trained on limited or unbalanced data.

Materials:

Computers or tablets with internet access

Access to Google’s Teachable Machine website

A few digital images or drawings for training

Instructions:

Divide students into small groups and ask them to gather or draw images of two categories (like “cats” and “dogs”).

Have each group train a simple image model using their collected data.

Allow one group to have more or clearer images than another to demonstrate imbalance.

Test each group’s model on the same set of images and record how often it classifies them correctly or incorrectly.

Discuss how uneven or biased data influenced the model’s performance.

Learning Outcome: Students will recognize how biased or incomplete data can affect AI decisions and why diversity and accuracy in training data are essential to fairness.
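For classes comfortable with a little code, the recording step in this activity can be sketched in Python. The test log below is hypothetical (it imagines a model trained on many cat images and few dog images), and nothing here calls Teachable Machine itself; students would type in their own results by hand. The sketch shows why a single overall score can hide a problem that per-category scores reveal.

```python
from collections import defaultdict

# Hypothetical test log: (true_label, predicted_label) for each test image.
# Imagines a model trained on many cat images and only a few dog images.
results = [
    ("cat", "cat"), ("cat", "cat"), ("cat", "cat"), ("cat", "cat"),
    ("dog", "cat"), ("dog", "dog"), ("dog", "cat"), ("dog", "cat"),
]


def accuracy_by_class(records):
    """Return the fraction of correct predictions for each true label."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for true_label, predicted in records:
        total[true_label] += 1
        if predicted == true_label:
            correct[true_label] += 1
    return {label: correct[label] / total[label] for label in total}


# Overall accuracy treats every image the same, whatever its category.
overall = sum(true == pred for true, pred in results) / len(results)
per_class = accuracy_by_class(results)

print(f"Overall accuracy: {overall:.2f}")
for label, score in per_class.items():
    print(f"Accuracy on {label} images: {score:.2f}")
```

In this invented log the model looks acceptable overall but performs far worse on dogs than on cats, which is the pattern the discussion step asks students to explain.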

Fact or Fake? The Deepfake Challenge – Recommended: Intermediate and Advanced Students

Activity Description: Students will explore how AI can both create and detect misinformation using tools like Reality Defender, Hive Moderation, or Google Fact Check Tools. They’ll compare real and fake videos or news articles and learn to verify digital content.

Objective: To teach students how misinformation spreads online and how to verify authenticity using fact-checking and deepfake detection tools.

Materials:

Internet access and projector (for class demo)

Links to real and fake videos or news articles

Access to a deepfake detection website (Reality Defender or Hive Moderation)

Instructions:

Show students two short clips or articles—one real, one AI-generated.

Ask them to guess which one is authentic and explain their reasoning.

Use a deepfake detector or fact-checking tool to test the content.

Discuss how misinformation can affect public opinion, elections, and society.

Learning Outcome: Students will learn to identify AI-generated misinformation and understand the importance of verifying sources and promoting digital literacy.

Privacy in the Digital Age – Recommended: Intermediate and Advanced Students

Activity Description: Students learn about data privacy by examining how much personal information is collected from online platforms and apps. They’ll design “privacy-first” AI ideas that protect user data and discuss real laws like GDPR and CCPA.

Objective: To help students understand how AI systems handle personal data and how laws protect privacy.

Materials:

Internet-connected devices

Printed or online summaries of GDPR and CCPA

Worksheet for students to design a privacy-friendly app or AI system

Instructions:

Begin by asking students to list all the apps they use daily.

Discuss what data each app might collect and why.

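Introduce key privacy laws: the EU’s General Data Protection Regulation (GDPR), the California Consumer Privacy Act (CCPA), and the U.S. Children’s Online Privacy Protection Act (COPPA).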

In pairs, students design a new AI app that uses minimal data and includes user consent features.

Present ideas and discuss how these apps promote trust.

Learning Outcome: Students will understand the importance of protecting data, recognize how privacy laws function, and learn to think like responsible AI designers.
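Students who want to prototype their design can start from a short Python sketch like the one below. It is only an illustration of the "minimal data plus consent" idea from this activity: the field names, the sample profile, and the `minimize` function are all hypothetical, and a real app would need far more than this to comply with any privacy law.

```python
# Fields the hypothetical app truly needs in order to work (data minimization).
REQUIRED_FIELDS = {"username"}
# Optional fields are stored only when the user has explicitly opted in.
OPTIONAL_FIELDS = {"age", "location"}


def minimize(profile, consented):
    """Keep required fields plus only the optional fields the user consented to."""
    allowed = REQUIRED_FIELDS | (OPTIONAL_FIELDS & set(consented))
    return {key: value for key, value in profile.items() if key in allowed}


# Everything the device could share (invented sample data).
profile = {
    "username": "sam",
    "age": 16,
    "location": "Denver",
    "contacts": ["alex", "jo"],  # neither required nor optional: never stored
}

# The user agreed to share their age but not their location.
stored = minimize(profile, consented={"age"})
print(stored)
```

The design choice worth discussing is that the default is to store nothing extra: a field is kept only if it is on an approved list and the user has said yes, rather than being collected unless the user objects.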

Design Your AI Code of Ethics – Recommended Age: 14–18 (High School and College Prep)

Activity Description: Students create their own AI Code of Ethics by reflecting on fairness, accountability, privacy, and transparency. Using ChatGPT or Google Docs, they’ll collaborate to draft principles for how AI should be used in schools, workplaces, or government.

Objective: To encourage moral reflection and empower students to create practical ethical frameworks for real-world AI use.

Materials:

Computers or tablets

Access to ChatGPT or Google Docs

Worksheet with examples of AI principles (fairness, transparency, non-discrimination)

Instructions:

Review examples of ethical guidelines from organizations like UNESCO or IBM.

Ask each group to write 5–7 guiding principles for their AI Code of Ethics.

Use ChatGPT to generate examples or case studies where these principles might apply.

Have each group present their code and explain why their principles matter.

Learning Outcome: Students will gain a deeper understanding of how ethics shape technology and learn how to apply moral reasoning to emerging AI issues.
